AI Code Reviews

Leveraging LLMs to Boost Productivity

Large Language Models (LLMs) have been taking the world by storm. These tools now help engineers explore, refine and consider other options in the way we code. They're not perfect, but they can provide a productivity boost and save quite a few keystrokes when it comes to established patterns. They're only going to get better from here on out, and it would be remiss not to leverage them to improve productivity, even by a little bit.

According to GitHub's research, developers who used GitHub Copilot finished tasks up to 55% faster than those who didn't. Let's be a bit more conservative though: assume 10-20% time savings and ignore the happiness factor, because that's hard to quantify.

The value add to the business is pretty high. Here are some quick assumptions and math:

- The median salary for a Senior Software Engineer at the time of writing is A$200,626 (source: levels.fyi).

- They work ~38 hours a week

- Using various calculators, that's about $100/hr rounded up, before tax

50 engineers x $100/hr x 3 hrs of actual coding = $15,000/day. A productivity boost of 10-20% means $1,500 to $3,000 per day in savings, or $30,000 to $60,000 a month assuming about 20 working days.

That's being a bit conservative and assuming people only code 3 of the 8 hours because they need time to design, think, document, have meetings etc. Really, anything you get above those few hours is a win. Adjust the number of engineers to your team size, but it would all be relative. Smaller teams have smaller funding. I simply used the engineering headcount size that I typically work with.
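The back-of-the-envelope math above can be reproduced with a few lines of Python so you can plug in your own team size. This is only a sketch: the function name is made up for illustration, and the 20 working days per month is an assumption rather than a figure from any source.

```python
def daily_savings(engineers: int, hourly_rate: float,
                  coding_hours: float, boost: float) -> float:
    """Value of the coding time saved per working day for a given boost."""
    return engineers * hourly_rate * coding_hours * boost


# Figures from the text above; WORKING_DAYS_PER_MONTH is an assumption.
ENGINEERS = 50
HOURLY_RATE = 100          # dollars per hour, before tax
CODING_HOURS = 3           # hours of actual coding per day
WORKING_DAYS_PER_MONTH = 20

for boost in (0.10, 0.20):
    per_day = daily_savings(ENGINEERS, HOURLY_RATE, CODING_HOURS, boost)
    per_month = per_day * WORKING_DAYS_PER_MONTH
    print(f"{boost:.0%} boost: ${per_day:,.0f}/day, ${per_month:,.0f}/month")
```

Change `ENGINEERS`, `HOURLY_RATE` or `CODING_HOURS` to match your own situation; the result scales linearly with each of them.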

Experimenting on Code Reviews

The idea

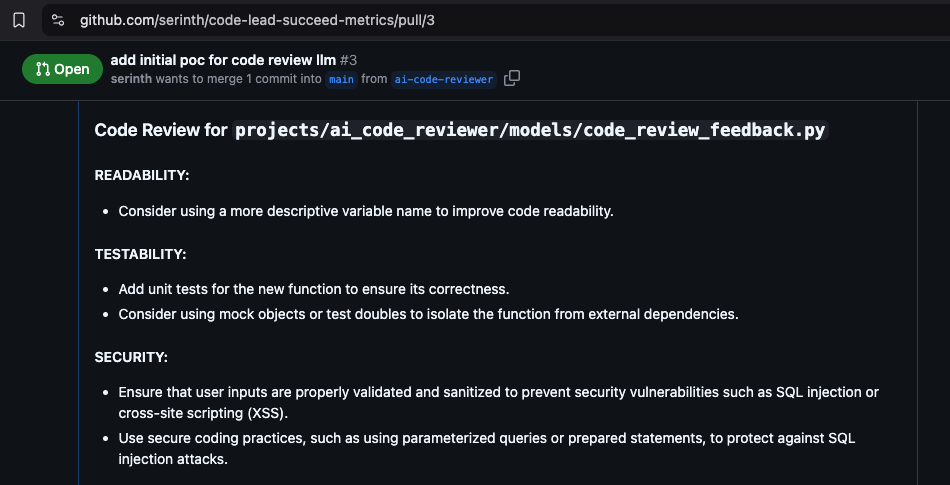

Automatically create PR reviews (in the form of comments) with an emphasis on tackling things that are normally an afterthought: Security, Testability and Readability.

Instead of using LLMs to solve specific coding problems, the idea is a quick review to make sure pull requests don't slip through with gaping holes that have negative long-term multiplier effects, usually resulting in exponentially more effort to rectify down the road.

There are plenty of tools out there like Copilot, Cursor etc. that use agents to help solve coding problems in near real time. This example is a bit different and may even be obsolete in a month's time given the rapid iteration in the industry, but it will be used to demonstrate the thinking on how we can make LLMs work for us.

The solution

Note

Update (April 2025): LLMs have increased their context sizes significantly, e.g. Google's Gemini 2.5 Pro at time of writing has a 2M context window size. I've had good success using foundational models for reviews, and you can do it all in one go without chunking it down per file as I previously did below. For larger code bases, you can compress the code down using something like Repomix or logically separate it into sections. Experiment to see what works best for you.

The solution is a web service that listens for GitHub pull request events via a webhook. It reviews the code file by file against the three concerns mentioned above, then posts its review as a comment for the author's consideration. It uses a local LLM trained on code to do the analysis.
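As a rough illustration of the listening part, the sketch below decides whether an incoming webhook delivery should trigger a review. GitHub names the event in the `X-GitHub-Event` header and puts an `action` field in the pull request payload; the function name and the choice of which actions to review are assumptions made for this example, not something taken from the repository.

```python
import json

# Pull request actions worth reviewing. This selection is an assumption.
REVIEWABLE_ACTIONS = {"opened", "reopened", "synchronize"}


def should_review(event_name: str, body: bytes) -> bool:
    """Return True when a delivery is a pull request event that needs a review.

    event_name is the value of the X-GitHub-Event header,
    body is the raw JSON request body.
    """
    if event_name != "pull_request":
        return False
    try:
        payload = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return False
    return isinstance(payload, dict) and payload.get("action") in REVIEWABLE_ACTIONS
```

Keeping this check as a pure function makes it easy to test without a running web server, whichever framework ends up hosting the endpoint.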

GitHub integration was used as an example because it's super popular, takes only a couple of clicks to set up, and has clear documentation.

A local LLM was used to reduce costs and protect the IP of the code (it doesn't feed any public LLMs). Plus we can control the egress to guarantee that the LLM can't call home. There are a lot of pretty good open source models out there that have been quantized to run on less powerful hardware. I have an older Intel MacBook Pro with 32GB RAM and an i7. It ran the CodeQwen 7B parameter model fine. It really struggles with larger models, though the M series Macs do a very good job with those. I tried a few models but settled on CodeQwen for the balance between performance and effectiveness for now. Some other models to consider:

Tip

Here's the reusable GitHub webhook code: AI Code Reviewer. It uses a local model via Ollama to do a file-by-file review.
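To give a feel for what a per-file review call to a local model might look like, here is a minimal sketch against Ollama's `/api/generate` endpoint using only the standard library. It is not the repository's code: the prompt wording, the function names and the `codeqwen` model tag are assumptions, and it expects Ollama to be listening on its default local port.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default address
MODEL = "codeqwen"  # assumed model tag; use whatever `ollama list` shows


def build_prompt(filename: str, patch: str) -> str:
    """Ask for a review focused on the three concerns from this article."""
    return (
        "You are reviewing a pull request. Point out gaps in Security, "
        "Testability and Readability for the diff below. Be concise.\n\n"
        f"File: {filename}\n\n{patch}"
    )


def build_request(filename: str, patch: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a single file's diff."""
    body = json.dumps({
        "model": MODEL,
        "prompt": build_prompt(filename, patch),
        "stream": False,  # return one JSON object instead of a token stream
    }).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )


def review_file(filename: str, patch: str, timeout: float = 300.0) -> str:
    """Send the diff to the local model and return the generated review text."""
    with urllib.request.urlopen(build_request(filename, patch), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

Calling `review_file("app.py", diff_text)` once per changed file gives one review per file, which can then be joined into a single PR comment.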

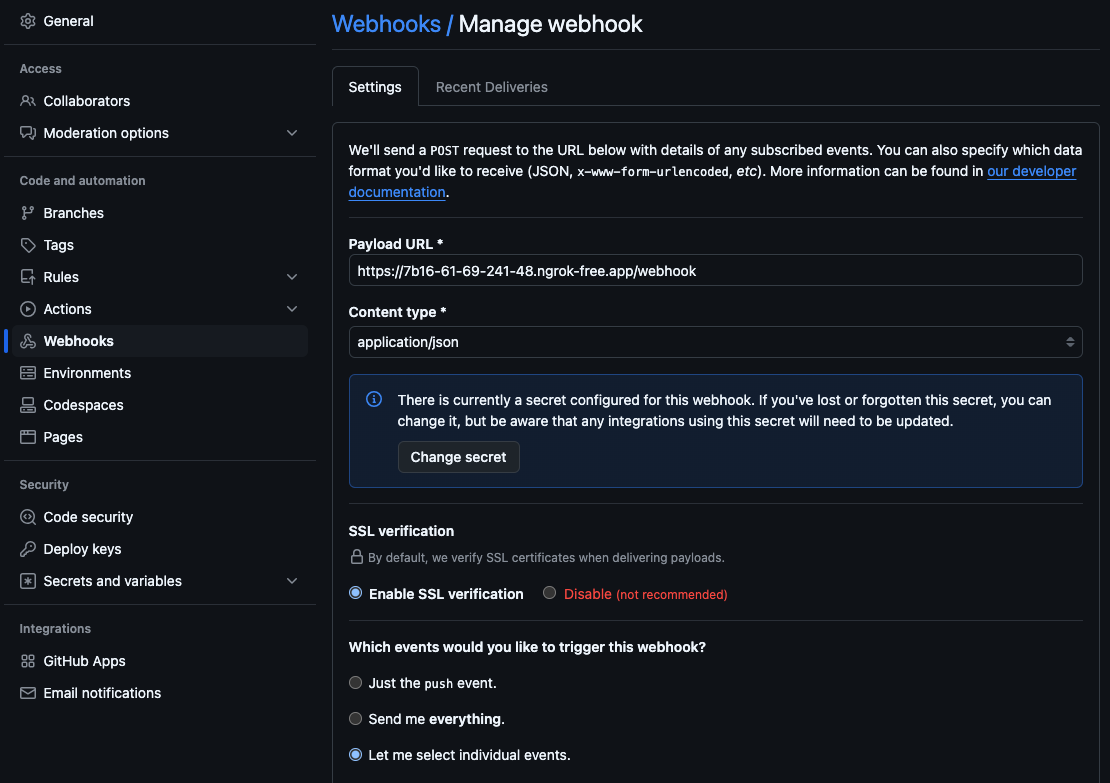

You'll need to set up the webhook first. For local development, just use a tunnel with something like ngrok. In the GitHub repository settings, set up something similar to this:

Make sure to select individual events and pick "Pull Requests". Otherwise you'll just get empty push messages (the default).

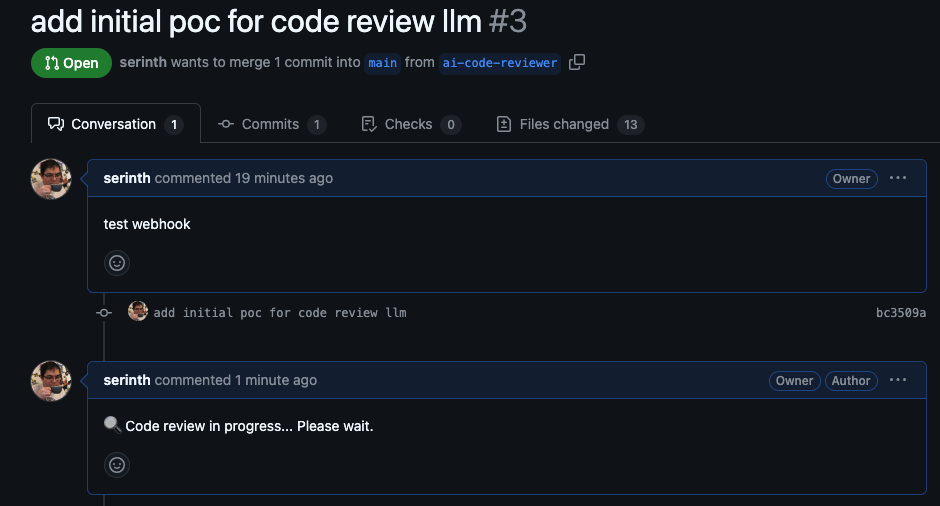

With the application running, you'll be able to see an initial comment on the PR whilst the LLM is doing its thing. The application is set up to be async and respond to GitHub's webhook immediately so that it doesn't time out:
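The respond-first, review-later pattern can be sketched with a queue and a background worker thread. This is an illustration under assumptions rather than the application's actual implementation: `run_review` is a hypothetical placeholder for the slow LLM work, and the 202 status is just one reasonable way to acknowledge the delivery.

```python
import queue
import threading

review_jobs: queue.Queue = queue.Queue()
completed: list = []  # stands in for "comment posted" in this sketch


def run_review(job: dict) -> None:
    """Hypothetical placeholder for the slow part (LLM calls, posting comments)."""
    completed.append(job["pr_number"])


def worker() -> None:
    """Process queued reviews one at a time, outside the request path."""
    while True:
        job = review_jobs.get()
        try:
            run_review(job)
        finally:
            review_jobs.task_done()


threading.Thread(target=worker, daemon=True).start()


def handle_delivery(payload: dict) -> tuple[int, str]:
    """Queue the review and acknowledge immediately, before any LLM work runs."""
    review_jobs.put({"pr_number": payload["number"]})
    return 202, "review queued"
```

The handler only enqueues and returns, so the webhook response time no longer depends on how long the model takes.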

Finally you'll get output like this:

Improvements

The repository mentions some improvements, but they're worth repeating here for posterity.

- Try out different models. They're changing almost monthly now so you won't know what you're gonna get

- Customize and train your own model

- Change the prompt to focus on different concerns

- Modify / filter the type of files it looks at so it ignores settings files, project files etc. and only looks at pure code diffs.

- Return code suggestions, not just high level gaps (though this overlaps more with the other tools)

- Maximize the model's context window by looking at more than one file at a time (or related files only based on imports)
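The file-filtering idea from the list above could be sketched as follows. The suffixes and file names to skip are purely illustrative assumptions and would need tuning per repository.

```python
from pathlib import PurePosixPath

# Both sets are illustrative assumptions; adjust them for your repository.
SKIP_SUFFIXES = {".json", ".yml", ".yaml", ".lock", ".md", ".csproj", ".sln"}
SKIP_NAMES = {".gitignore", ".editorconfig"}


def is_reviewable(path: str) -> bool:
    """True when the changed file looks like code rather than settings or project files."""
    p = PurePosixPath(path)
    return p.name not in SKIP_NAMES and p.suffix.lower() not in SKIP_SUFFIXES


def reviewable_files(paths: list[str]) -> list[str]:
    """Keep only the changed files worth sending to the model."""
    return [path for path in paths if is_reviewable(path)]
```

Filtering before calling the model saves both time and context window, since settings and lock files rarely benefit from this kind of review.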

For a low-effort tool though, it doesn't hurt to have it as a catch-all net for the items above.

This area has plenty of room for improvement; PRs and suggestions are welcome.